Floating point arithmetic can sometimes be frustratingly unstable, particularly when applied to large datasets. Even though the classic What Every Computer Scientist Should Know About Floating-Point Arithmetic seems to make the front page of of Hacker News on a yearly basis (1, 2, 3, 4, 5, 6), I have never seen any big data package actually apply one of the simplest and cheapest recommendations from it.

I’m talking about the Kahan Summation algorithm. Maybe it gets ignored because it’s covered half-way through the paper. Despite being buried, you can tell it’s important because the author uses uncharacteristally strong language at the end of the section on the algorithm:

Since these bounds hold for almost all commercial hardware, it would be foolish for numerical programmers to ignore such algorithms, and it would be irresponsible for compiler writers to destroy these algorithms by pretending that floating-point variables have real number semantics.

Whoa. Let’s not be foolish!

Example: The Harmonic Series in Clojure

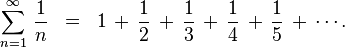

We’re going to be computing a partial sum of the Harmonic Series:

It’s another nice example because it contains terms that can’t be represented precisely in floating point and the true sum diverges.

Let’s start by computing the sum with infinite precision. Clojure’s Ratio class represents values internally using BigInteger to separately store the numerator and denominator. The summation happens using the grade-school style of making the denominators match and summing the numerators, so we have the exact running sum throughout. At the very end, we convert the number to a floating point double:

1 2 3 4 5 6 7 | |

For the first 10,000 elements, we’ll see numerical differences starting at the 14th decimal place, so just focus on the last two digits in the results.

As expected, we see a slightly different result if we compute the sum of doubles:

1 2 3 4 5 6 7 | |

One approach that will get more accurate results is to use an arbitrary precision representation of the numbers, like BigDecimal. If we naively try to convert harmonic-ratios to BigDecimal, we get an ArithmeticException as soon as we hit 1/3:

1 2 3 4 5 6 7 8 | |

We need to explicitly set the precision that we want using a MathContext. Let’s use 32 decimal places for good measure:

1 2 3 4 5 6 7 8 9 10 11 | |

Now, let’s see how Kahan Summation algorithm performs on doubles:

1 2 3 4 5 6 7 8 | |

Everything but vanilla summation of doubles has given us the same answer!

To be fair to doubles, we are summing them in what intuitively is a poor order. The smallest values are being added to the largest intermediate sums, preventing their low-order bits from accumulating. We can try to remedy this by reversing the order:

1 2 | |

Well, that’s different. This is the first time we’re seeing the floating point noise lead to something larger than the infinite precision answer.

Conclusion

For just a couple additional floating point operations per element, we get a result that competes with the more expensive arbitrary precision solutions. It also does better than the naive approach of pre-sorting, which is both more expensive and eliminates the ability to deal with the data in a streaming fashion.

In a subsequent post, I plan on covering how Kahan Summation can be used effectively in a map-reduce framework.